
The music industry stands at a turning point. Technology is not just changing how we listen—it’s now reshaping how music gets made.

One pressing question has taken center stage: can listeners really spot the difference between a song composed by a machine and one crafted by a human?

As artificial intelligence models become more advanced, some tracks written by software are now topping playlists.

But the ears of real fans may still hold the power to tell them apart.

Key Highlights

- Machines can now produce full songs, including melodies, lyrics, and vocals.

- Listeners often fail to notice whether a song came from software or a studio.

- Emotional tone and lyrical nuance remain difficult for software to replicate.

- Trained tools analyze musical and lyrical structure for digital fingerprints.

- Human composers still dominate genres that require improvisation and subtlety.

- The real test may not be accuracy—but emotional connection.

How Music Created by Algorithms Actually Works

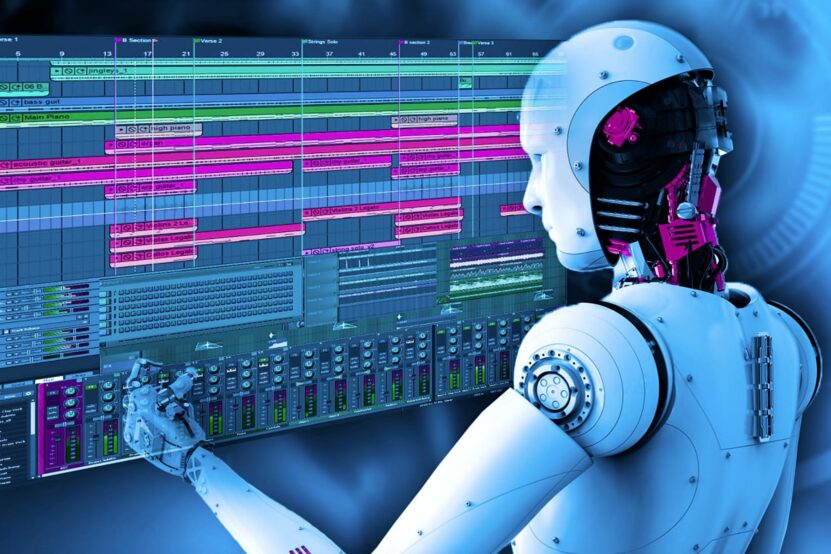

Artificial intelligence tools don’t just remix. They compose. Tools trained on vast libraries of human songs now craft melodies, lyrics, chords, and rhythms from scratch. Some even simulate vocal performances.

Generative models, including large language models for lyrics, are trained on thousands of pop songs, rap verses, and classical pieces to learn musical grammar. The goal? Mimic human creativity with machine logic. But the final product doesn't always pass for human-made.
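To make "learning musical grammar" concrete, here is a toy first-order Markov chain: it counts which note tends to follow which in a few example melodies, then samples new melodies from those counts. This is a much older and simpler technique than the neural models behind today's commercial tools, and the corpus and function names are hypothetical, but it shows the core idea of turning patterns in existing music into new output.

```python
import random
from collections import defaultdict


def train_transitions(melodies):
    """Count how often each note is followed by each other note (first-order Markov chain)."""
    counts = defaultdict(lambda: defaultdict(int))
    for melody in melodies:
        for current, nxt in zip(melody, melody[1:]):
            counts[current][nxt] += 1
    return counts


def generate(counts, start, length, seed=None):
    """Sample a melody by repeatedly picking a next note weighted by the learned counts."""
    rng = random.Random(seed)
    melody = [start]
    while len(melody) < length:
        options = counts.get(melody[-1])
        if not options:  # this note was never followed by anything in the corpus
            break
        notes = list(options)
        weights = [options[n] for n in notes]
        melody.append(rng.choices(notes, weights=weights)[0])
    return melody


# Hypothetical toy corpus of three short melodies (note names only, no rhythm).
corpus = [["C", "D", "E", "C"], ["C", "E", "G", "E", "C"], ["E", "D", "C"]]
model = train_transitions(corpus)
print(generate(model, "C", 8, seed=1))
```

Every note transition in the output already appears somewhere in the training melodies, which is one reason pattern-driven output can sound polished but predictable.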

Some tools generate songs based on genre, key, and emotional intent. Others produce lyrics first and wrap music around them. A few can create every element—beat, lyrics, harmonies, vocals—at once. That’s where the confusion starts.

Most software follows mathematical patterns. Music created this way often sounds polished, but overly structured. The output lacks the quirks and imperfections that define a personal touch. Yet, many listeners miss those cues entirely.

Can You Actually Tell the Difference?

Here’s the problem: you think you can tell. But can you really?

A study by researchers from Claremont Graduate University showed that listeners struggle to consistently identify whether a piece of music was machine-made or human-written. The confusion goes beyond melody: many listeners also misjudge lyrics, cadence, and phrasing.

One frequently cited detection tool is GPTZero. It was originally developed to detect machine-written text, so when it is applied to songs its analysis works most directly on the lyrics. It breaks the material into measurable components, assessing patterns of repetition, originality, and emotional fluctuation.

Unlike guessing by ear, this tool works on structural analysis rather than intuition.
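GPTZero's actual scoring method is not reproduced here. As a rough, hypothetical illustration of what "measuring repetition" in lyrics can mean, the sketch below computes the share of three-word phrases that occur more than once. The function name and the choice of word trigrams are assumptions made for this example.

```python
import re
from collections import Counter


def repetition_score(lyrics: str, n: int = 3) -> float:
    """Share of word n-grams that occur more than once (0.0 means no repeated phrases)."""
    words = re.findall(r"[a-z']+", lyrics.lower())
    grams = [tuple(words[i:i + n]) for i in range(len(words) - n + 1)]
    if not grams:
        return 0.0
    counts = Counter(grams)
    repeated = sum(c for c in counts.values() if c > 1)  # every occurrence of a repeated n-gram
    return repeated / len(grams)


print(repetition_score("I love you I love you"))     # 0.5
print(repetition_score("one two three four five"))  # 0.0
```

A high score alone proves nothing, since human choruses repeat too; a score like this only becomes useful when compared against typical values for a genre.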

GPTZero employs a multi-stage process using DeepAnalyse™ Technology. It compares material to known artificial outputs from models like GPT-4, Gemini, and LLaMA. According to its developers, what sets it apart is its ability to reduce false positives and identify machine traits that are hard to spot by reading or listening alone.

So yes, tools can help. But humans? Less reliable. Even trained musicians sometimes mistake a synthetic composition for a soulful ballad.

Where Machines Excel—and Where They Fail

Machines win on volume and consistency. They can write thousands of melodies in seconds. Need ten trap beats by lunch? No problem. But emotional range and spontaneous creativity? That’s where machines fall short.

Machine Strengths:

- Perfect timing

- Endless idea generation

- No fatigue or emotional bias

Machine Weaknesses:

- No true inspiration

- Struggles with layered metaphor or sarcasm

- Poor at responding to real-time audience feedback

Music driven by personal pain, lived experience, or cultural nuance still escapes most artificial creators. They understand patterns, but not passion. They recognize tropes, but not trauma.

That’s why human-created tracks still dominate genres like jazz, soul, folk, and live improv. These styles depend on authenticity, not formula.

What Makes a Human Track Feel “Real”?

The answer lies in subtle choices. Human composers stretch or compress rhythms, add vocal imperfections, shift key unexpectedly, or change lyrics to reflect personal stories. Machines avoid risk. Humans lean into it.
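The "stretch or compress rhythms" idea can be shown with the kind of "humanize" feature many sequencers offer: nudge each perfectly quantized note a little off the grid, then measure how far the notes sit from it. The function names and the 20 ms default below are illustrative assumptions, and random nudges are only a crude stand-in for a player's intentional timing.

```python
import random


def humanize(onsets, max_shift=0.02, seed=None):
    """Nudge each note onset (in seconds) by a random amount up to +/- max_shift."""
    rng = random.Random(seed)
    return [max(0.0, t + rng.uniform(-max_shift, max_shift)) for t in onsets]


def grid_deviation(onsets, step):
    """Mean distance (in seconds) between each onset and its nearest grid line."""
    if not onsets:
        return 0.0
    return sum(abs(t - round(t / step) * step) for t in onsets) / len(onsets)


# Sixteen 16th notes at 120 BPM: one note every 0.125 s, exactly on the grid.
quantized = [i * 0.125 for i in range(16)]
print(grid_deviation(quantized, 0.125))               # 0.0
print(grid_deviation(humanize(quantized, seed=0), 0.125))
```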

In live settings, real musicians adjust in real time. They react to crowd energy. They miss a note and recover. That moment—the flaw followed by grace—is what connects artist to listener.

Also, consider lyrics. Machines tend to loop safe phrases and avoid complex emotions. A songwriter who lived through a breakup, a war, or a spiritual crisis will write words a model can’t reproduce.

Emotional authenticity doesn’t come from data. It comes from life. That’s hard to simulate.

So Why Do Some AI Tracks Still Go Viral?

Because production value fools people. When a generated track gets mastered by a skilled engineer and sung by a professional vocalist, the line blurs.

Listeners often associate “quality” with audio polish—not source authenticity. And if a song sounds good, people hit play again. Emotional truth becomes optional.

Platforms also play a role. TikTok trends reward simplicity and catchiness over lyrical depth. A catchy artificial tune with the right hook and hashtag can go viral in hours.

The Tools Experts Use to Test Song Origins

Professionals don’t rely on ears alone anymore. They turn to structured tools that examine the DNA of a track. Here’s what they look at:

1. Lyric Structure

Machines often repeat certain templates. Analysts scan for looped rhyme schemes or shallow metaphors.
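A looped rhyme scheme can be spotted with a very rough script. The sketch below labels each line by the last two letters of its final word, a spelling-based shortcut rather than true phonetic rhyme detection, and then checks whether the scheme is one short block repeated. Both functions are hypothetical examples, not a description of any specific analyst's tool.

```python
import re


def rhyme_scheme(lines, suffix_len=2):
    """Label lines A, B, C... by the last letters of their final word.

    Spelling-based approximation; assumes at most 26 distinct endings.
    """
    labels, seen = [], {}
    for line in lines:
        words = re.findall(r"[a-z']+", line.lower())
        key = words[-1][-suffix_len:] if words else ""
        if key not in seen:
            seen[key] = chr(ord("A") + len(seen))
        labels.append(seen[key])
    return "".join(labels)


def looped_pattern(scheme):
    """Return the shortest block that repeats to form the whole scheme, or None."""
    for size in range(1, len(scheme) // 2 + 1):
        if len(scheme) % size == 0 and scheme[:size] * (len(scheme) // size) == scheme:
            return scheme[:size]
    return None


verse = ["I walk the night", "I feel the rain", "Under neon light", "Falling down again"]
scheme = rhyme_scheme(verse)
print(scheme, looped_pattern(scheme))  # ABAB AB
```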

2. Musical Arrangement

Human tracks show inconsistency. Machines rarely use syncopation, jazz-style offbeats, or tempo shifts mid-song.
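Two of these arrangement cues can be approximated numerically. In the sketch below, the share of notes landing off the beat serves as a crude proxy for syncopation, and a change in the spacing between beats flags a tempo shift. The function names, tolerances, and thresholds are assumptions chosen for illustration; real musicological syncopation measures are more elaborate.

```python
def offbeat_ratio(onsets_in_beats, tolerance=0.05):
    """Fraction of note onsets (measured in beats) that do not land on a whole beat."""
    if not onsets_in_beats:
        return 0.0
    off = sum(1 for b in onsets_in_beats if abs(b - round(b)) > tolerance)
    return off / len(onsets_in_beats)


def tempo_changes(beat_times, threshold=0.05):
    """Indices of beats where the beat spacing changes by more than `threshold` (relative).

    Assumes beat_times (in seconds) are strictly increasing.
    """
    gaps = [b - a for a, b in zip(beat_times, beat_times[1:])]
    return [i + 1 for i, (g0, g1) in enumerate(zip(gaps, gaps[1:]))
            if abs(g1 - g0) / g0 > threshold]


print(offbeat_ratio([0, 0.5, 1, 1.5]))                  # 0.5
print(tempo_changes([0, 0.5, 1.0, 1.5, 1.9, 2.3]))      # [3]
```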

3. Emotional Curves

AI-generated tracks often fail to follow emotional arcs. Analysts use waveform tools to map dynamic range across time.
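Mapping dynamic range across time usually starts with a loudness envelope. The sketch below, a minimal version assuming raw audio samples in the range -1.0 to 1.0, computes the root-mean-square level of fixed-size windows, converts it to decibels, and reports the gap between the loudest and quietest window. The function names and the non-overlapping windows are simplifying assumptions; a nearly flat envelope hints at a track without much of an arc.

```python
import math


def rms_envelope(samples, window):
    """Root-mean-square level of each non-overlapping window of samples."""
    env = []
    for start in range(0, len(samples) - window + 1, window):
        chunk = samples[start:start + window]
        env.append(math.sqrt(sum(x * x for x in chunk) / window))
    return env


def to_db(levels, floor=1e-9):
    """Convert linear levels to decibels, clamping silence to a small floor."""
    return [20 * math.log10(max(level, floor)) for level in levels]


def dynamic_range_db(samples, window):
    """Difference in dB between the loudest and quietest window."""
    db = to_db(rms_envelope(samples, window))
    return max(db) - min(db) if db else 0.0


# Hypothetical signal: a quiet passage followed by a loud one.
quiet = [0.1, -0.1] * 50
loud = [1.0, -1.0] * 50
print(round(dynamic_range_db(quiet + loud, 100), 2))  # 20.0
```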

4. Source Matching

Tools like GPTZero compare material to massive databases of known machine outputs. Applied to songs, this kind of matching looks for reused frameworks, lyric snippets, or chord progressions.
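How any commercial detector matches sources internally is not public in this article, so the following is only a hypothetical sketch of the general idea. It breaks chord progressions into overlapping four-chord snippets and scores each reference track by Jaccard similarity (shared snippets divided by all distinct snippets). The reference database, its entry names, and the snippet length are invented for the example.

```python
def ngrams(seq, n):
    """Set of overlapping n-length snippets from a sequence."""
    return {tuple(seq[i:i + n]) for i in range(len(seq) - n + 1)}


def best_match(track_chords, reference_db, n=4):
    """Return (name, score) of the reference sharing the most chord n-grams (Jaccard)."""
    track = ngrams(track_chords, n)
    best_name, best_score = None, 0.0
    for name, chords in reference_db.items():
        ref = ngrams(chords, n)
        union = track | ref
        score = len(track & ref) / len(union) if union else 0.0
        if score > best_score:
            best_name, best_score = name, score
    return best_name, best_score


# Hypothetical database of known machine-generated progressions.
known_outputs = {
    "gen_001": ["C", "G", "Am", "F", "C", "G", "Am", "F"],
    "gen_002": ["Dm", "G", "C", "A7", "Dm", "G", "C", "C"],
}
print(best_match(["C", "G", "Am", "F", "C", "G"], known_outputs))  # ('gen_001', 0.75)
```

Shared progressions are weak evidence on their own: countless human songs use the same four chords, which is why matching usually combines several signals.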

What Listeners Should Pay Attention To

Even if you’re not an expert, a few habits can sharpen your detection skills.

- Listen for emotional depth: Does the song say something personal?

- Pay attention to lyrical coherence: Is the story real or recycled?

- Check the performance: Does the singer sound like they mean it?

If something feels robotic, it probably is. If it hits too many clichés or sounds oddly perfect, question it. But remember—some humans write like machines, too.

Conclusion

Music is entering a new phase, shaped by code as much as culture. Technology can now build entire tracks from scratch. Yet human instinct still feels the difference.

Detection tools are helping the industry set boundaries, catch copycats, and highlight originality. But for everyday listeners, the challenge stays personal.

The future may hold more blends than battles. Humans and software will likely collaborate more than compete. But when it comes to real connection, emotion still wins. Machines may hit the notes—but only people live the story.
